User research has a timing problem. You need answers before your sprint ends, but traditional methods operate on a different calendar entirely. Participant recruitment alone can eat three weeks. Then comes scheduling across time zones, coordinating incentives, and managing no-shows. By the time you gather feedback, your development team has either moved forward with assumptions or stalled waiting for data that arrives too late.

We built Evelance because we kept watching this same pattern repeat across product teams, UX researchers, and design professionals. The research question was ready on Monday. The answer arrived a month later. That gap between question and insight is where good product decisions go to die.

Dscout has served teams well for capturing rich qualitative feedback through diary studies and video responses. The platform excels at collecting authentic, in-the-moment data from real users. But when the required budget starts at $51,163 annually and the timeline starts at several weeks, smaller teams and faster-moving projects need different options.

Here we look at 9 Dscout alternatives that approach user research from different angles. Some prioritize traditional testing methods with large participant pools. Others focus on specific research types like information architecture or behavioral analytics. One takes a fundamentally different approach by using predictive models to deliver insights in minutes rather than weeks.

1. Evelance: Predictive User Research in Minutes

At Evelance, we took the user research process and asked a different question. Instead of asking how to recruit participants faster, we asked what would happen if you could skip recruitment entirely.

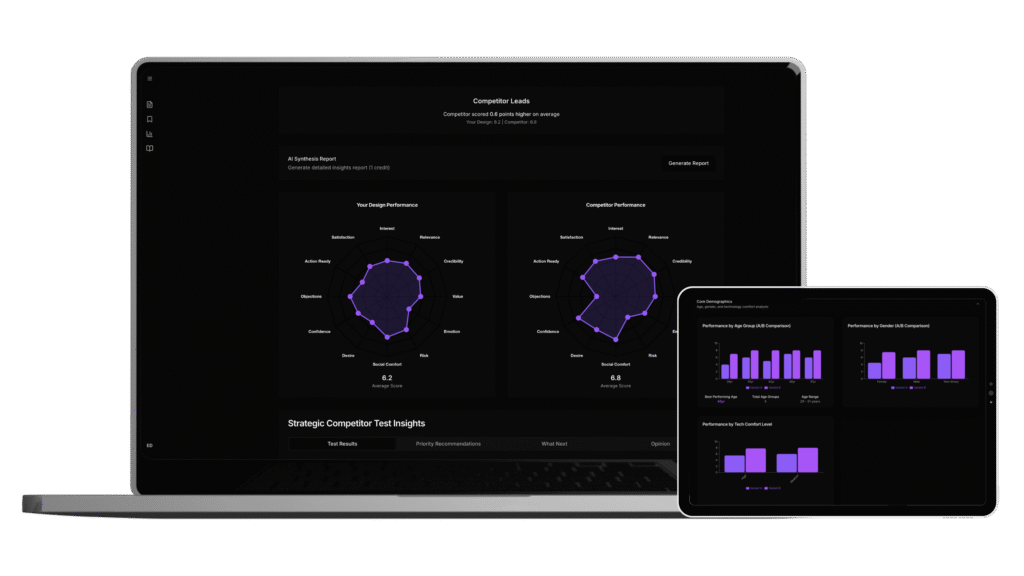

Our platform uses predictive personas built from behavioral data to simulate user reactions. You upload a design, prototype, or live website URL, and within minutes, you receive feedback from audiences that match your target segments. No outreach. No scheduling. No waiting for time zones to align.

What This Means for Your Workflow

Tests complete in minutes. When your development team needs feedback before committing resources, you provide it the same day. Research stays on the same timeline as a two-week sprint because validation no longer requires a separate, slower calendar.

The platform provides access to over 1.8 million personas. You can target users by life context, job type, technology comfort, and behavioral patterns. Consumer segments. Professional profiles. Specific demographics. The targeting precision matches what you would specify in a traditional screener, but without waiting for qualified participants to respond.

Psychological Depth Beyond Surface Feedback

Traditional testing tells you what happened. Someone abandoned the pricing page. Conversion dropped. Users hesitated at the signup form.

Evelance tells you why. Results include scores across 12 psychological dimensions: Interest Activation, Relevance Recognition, Credibility Assessment, Value Perception, Emotional Connection, Risk Evaluation, Social Acceptability, Desire Creation, Confidence Building, Objection Level, Action Readiness, and Satisfaction Prediction.

When you see that Value Perception dropped despite adding more feature detail, you understand the problem differently than when you simply see a lower conversion rate. When you measure that Action Readiness decreased for a specific persona, you know where to focus design changes.

Flexible Testing Options

You can test single designs, compare two variants side by side, or benchmark against competitors. The platform accepts live websites through URL, PNGs, mobile app screens, and presentation files. A trial gives you 5 days to test your own projects with full access to predictive personas and psychology scoring.

The Bottom Line

For teams where recruitment timing and budget constraints create research bottlenecks, Evelance removes those barriers. You validate concepts before building them. You iterate before launching. You make decisions backed by psychological insights rather than assumptions about what users might think.

2. UserTesting: Enterprise-Scale Participant Access

UserTesting positions itself as the enterprise solution for organizations needing extensive participant pools and comprehensive testing options.

Platform Capabilities

The platform offers both moderated and unmoderated testing methods with access to over 2 million active participants. This pool size means recruitment happens faster than building your own panel from scratch.

Enterprise features include team collaboration tools, results sharing, and integration with product management workflows.

Pricing Reality

Pricing varies considerably based on users, features, and negotiation outcomes. According to Userlytics research, annual costs can range from $16,900 to $136,800 depending on the chosen plan.

A typical 5-seat Advanced plan with 100 session units lists at $49,711. Small teams with strong negotiation skills report paying around $25,700 for similar configurations.

User Feedback

TrustRadius reviews note that seat license costs run high. Capterra reviews mention that some responses can feel hurried or low-effort unless you carefully vet participants. This quality variance requires additional time investment to filter results.

3. Maze: Design Tool Integration

Maze focuses on product discovery and design validation with tight integration into popular design tools.

Integration Advantages

Direct connections with Figma, Adobe XD, and Sketch allow you to test prototypes, wireframes, and designs without complex setup or export processes. The workflow feels native to design-first teams.

The platform supports multiple research methods: usability testing, surveys, interviews, card sorting, tree testing, and prototype validation.

Pricing Tiers

Maze offers a free plan with 1 study per month, 5 seats, and basic features. The Starter Plan costs $99 per month or $1,188 annually, designed for small teams beginning their research practice.

The gap between tiers presents a challenge. The Starter Plan restricts you to one study monthly, while the Organization Plan starts at $15,000 per year. Teams in the middle ground may find neither tier fits their needs well.

Best Fit

Maze works well for design teams already embedded in Figma workflows who want research integrated into their existing tool ecosystem.

4. Hotjar: Behavioral Analytics Focus

Hotjar takes a different approach centered on behavioral analytics for live websites.

What Hotjar Does Well

The platform excels at showing what users actually do on your website. Heatmaps reveal where clicks concentrate. Session recordings show individual user journeys. Feedback widgets collect user opinions at specific moments.

This observational data complements traditional testing by revealing behavior patterns across your entire user base rather than a recruited sample.

Pricing Options

The basic plan is free with limited features. Plus plans start at $39 per month. Business and Scale plans offer advanced capabilities with custom pricing, starting around $32 monthly for entry-level options.

Scope Limitations

Hotjar works only on websites. Mobile apps and prototypes fall outside its capabilities. The platform also does not offer the variety of testing types that UX researchers and designers often need, such as card sorting, tree testing, or moderated interviews.

Teams wanting to test designs before development or evaluate mobile applications need additional tools.

5. Lyssna: Traditional Usability Testing

Lyssna, formerly known as UsabilityHub, offers traditional usability testing methods with access to a substantial participant panel.

Research Panel Access

The platform provides recruitment from a panel of over 690,000 participants across 124 countries with 395+ demographic attributes. This global reach helps when testing with specific international segments.

Pricing Model

The structure splits between subscription and panel costs. Basic plans start at $75 monthly with limited features. Pro plans run $175 monthly.

Panel recruitment adds separate charges calculated at $1 per participant per minute of test length. A 5-minute test with 20 participants costs $100 in panel fees on top of your subscription. This variable component makes budgeting more complex.
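To make the budgeting arithmetic concrete, here is a minimal sketch of the panel fee calculation described above. It assumes the flat rate of $1 per participant per minute applies with no minimums, discounts, or taxes. The function names are illustrative and not part of any Lyssna API.

```python
def panel_fee(participants, minutes, rate_per_minute=1.0):
    """Panel cost = rate x participants x test length in minutes (assumed flat rate)."""
    return participants * minutes * rate_per_minute


def monthly_total(subscription, tests):
    """Subscription plus panel fees for a list of (participants, minutes) tests."""
    return subscription + sum(panel_fee(p, m) for p, m in tests)


# The example from the text: a 5-minute test with 20 participants.
print(panel_fee(20, 5))  # 100.0

# Two such tests in one month on the $75 Basic plan.
print(monthly_total(75, [(20, 5), (20, 5)]))  # 275.0
```

Running the numbers this way before a study shows how quickly the variable component can outgrow the subscription itself.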

Trade-offs

Users note a confusing pricing model that requires careful calculation. The platform also lacks AI functionality and moderated testing features that some teams require.

6. Optimal Workshop: Information Architecture Specialist

Optimal Workshop specializes in information architecture research for teams focused on navigation and content organization.

Specialized Tools

The platform offers tools specifically designed for testing how users understand your site structure. Card sorting reveals how users group and categorize content. Tree testing shows whether users can find items within your navigation hierarchy. These specialized methods address questions that general usability testing handles less effectively.

Pricing Changes

Paid plans start at $129 per month. The company discontinued its free plan for new customers in April 2024, removing a previous entry point for teams wanting to evaluate the platform.

Best Use Cases

Teams specifically concerned with information architecture, website reorganization, or navigation redesign will find focused tools here. General usability testing needs may be better served elsewhere.

7. UXtweak: Comprehensive Testing Suite

UXtweak provides a comprehensive usability testing suite with behavior analytics built in.

Feature Range

UXtweak includes tree testing, first-click testing, 5-second tests, and mobile testing in a single platform. This range covers most common research methods without requiring multiple tool subscriptions.

Onsite respondent recruitment and behavior analytics round out the offering.

Pricing and Perception

Plans start at 49 euros per month. Based on Capterra reviews, UXtweak scores 4.8 out of 5, the highest among traditional testing platforms in comparison studies.

Value Proposition

UXtweak positions itself as a practical choice for UX professionals who need multiple testing methods at accessible price points. The all-in-one approach reduces tool sprawl for teams with varied research needs.

8. Lookback: Moderated Sessions Focus

Lookback focuses specifically on moderated testing and interviews with strong team collaboration features.

Moderated Research Strength

If your research program relies heavily on interviews and moderated sessions where researchers interact directly with participants, Lookback provides a centralized environment for this work. Team collaboration features help when multiple researchers need to access and analyze the same sessions.

Pricing Structure

No free plan exists, but a 60-day trial lets you evaluate the platform. After the trial, prices start at $25 per month with a limit of 10 sessions per year. The professional Insights Hub plan costs $344 per month with annual billing.

Limitations

The platform lacks options for unmoderated testing. Teams needing both moderated and unmoderated capabilities will need additional tools. Lookback also lacks a built-in participant panel, requiring external recruitment.

9. Userlytics: Scalable Testing Plans

Userlytics offers scalable testing plans designed to grow with your research needs.

Plan Structure

The platform offers tiered pricing with the Premium plan at $499 per month and the Advanced plan at $999 per month. This structure lets teams start at one level and expand as research programs mature.

Positioning

Userlytics serves teams wanting flexibility in their testing volume without committing to enterprise-level contracts. The monthly pricing model suits organizations with variable research needs throughout the year.

What is the Best Alternative to Dscout?

After evaluating these 9 platforms, Evelance emerges as the best alternative to Dscout for teams where recruitment timelines and budget constraints create research bottlenecks. Traditional platforms optimize around the same fundamental model: recruit participants, schedule sessions, collect feedback. Evelance removes that model entirely and replaces it with predictive validation that delivers results in minutes.

If Recruitment Timing Slows Your Process

When development stalls waiting for participant feedback, speed becomes the priority. Traditional platforms still require recruitment time regardless of how efficient their participant panels become. Evelance eliminates this timeline entirely. No outreach. No scheduling. No waiting for time zones to align.

If Budget Constrains Your Options

Annual costs exceeding $50,000 for traditional enterprise solutions represent a significant commitment for growing teams. Evelance starts at $399 per month, letting you run multiple tests without the budget approval cycles that enterprise contracts require.
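For a rough sense of scale, the monthly and annual figures can be put on the same footing. This is a back-of-the-envelope sketch that assumes the listed monthly price is simply billed for twelve months, with no discounts, usage fees, or add-ons. The helper name is illustrative.

```python
def annual_cost(monthly_price, months=12):
    """Annualize a monthly price, assuming flat billing with no discounts or add-ons."""
    return monthly_price * months


evelance_annual = annual_cost(399)
enterprise_annual = 50_000  # the enterprise threshold cited above

print(evelance_annual)  # 4788
# Share of the enterprise figure, as a percentage.
print(round(evelance_annual / enterprise_annual * 100, 1))  # 9.6
```

The same helper confirms the Maze Starter figures quoted earlier: $99 per month annualizes to $1,188.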

If You Need Psychological Depth

Standard usability testing catches confusion and friction. Researchers watch users struggle and document where problems occur. This observation tells you something went wrong.

Predictive psychological scoring tells you why. When you can measure Value Perception changes, Action Readiness shifts, and Objection Level increases across different personas, you understand problems at a level that enables better solutions.

If You Need Audience Precision

Traditional recruitment delivers whoever responds to your screener. Evelance provides access to over 1.8 million predictive personas with targeting across job type, life context, technology comfort, and behavioral patterns. You test with the specific audience segment you actually need rather than a general approximation.

Making Research Continuous Rather Than Gated

Traditional research operates at predetermined gates. You plan a study, recruit participants, run sessions, analyze results, and deliver findings. This process suits milestone-based decisions but struggles with the pace of modern product development.

When validation takes minutes rather than weeks, research transforms into something you do continuously. You test an idea on Tuesday morning and iterate by Tuesday afternoon. You validate a concept before committing development resources rather than discovering problems after building.

Each platform on this list serves legitimate research needs.

The choice depends on what limits your research most severely. For teams where recruitment timing and budget constraints create the primary bottlenecks, predictive audience models offer a fundamentally different path forward. You ask questions and receive answers on the same timeline that product decisions actually require.

Dec 10,2025
