Product teams testing new designs face a practical problem that costs real money. You spend weeks scheduling interviews, recruiting participants, and analyzing results before you know if your checkout flow actually works. By then, engineering has already started building features that might need complete redesigns. This timing gap between design creation and user validation creates expensive rework cycles that push launch dates back by months.

Both predictive user research and synthetic users promise to compress these validation cycles. But here’s where teams get stuck: synthetic users sound advanced on paper, yet they often produce feedback that reads like marketing copy rather than actual human reactions. Predictive user research, by contrast, delivers specific psychological scores and behavioral insights grounded in real demographic data. That contrast points to an uncomfortable truth about synthetic users that vendors rarely mention.

The Core Technology Behind Each Approach

Synthetic users rely on language models trained to generate plausible responses. These systems create fictional personas that answer questions about your product based on patterns they’ve learned from text data. The technology works by predicting what someone might say given certain demographic parameters. You input that you want feedback from a “35-year-old marketing manager” and the system generates responses that sound appropriate for that profile.

This approach hits limitations quickly. Language models excel at producing grammatically correct sentences that match expected patterns. They struggle to capture the messy reality of human decision-making, where a marketing manager might reject your product because the color scheme reminds them of their previous employer’s failed rebrand, or where financial stress from a recent car repair makes them hypersensitive to subscription pricing. These contextual factors shape real purchase decisions but rarely appear in synthetic user feedback.

Predictive user research takes a fundamentally different approach. Instead of generating text responses, it measures psychological reactions across specific dimensions like credibility assessment, value perception, and risk evaluation. The system draws from extensive demographic and behavioral data to model how actual population segments respond to design elements. A predictive model analyzing your pricing page doesn’t generate generic statements about “value for money.” Instead, it produces numerical scores showing, for example, that users aged 40-55 with household incomes between $75,000 and $100,000 score your credibility at 4.2 out of 10 because your security badges appear below the fold.

The technical architecture matters here. Predictive systems like Evelance’s Dynamic Response Core factor in situational variables that synthetic users miss entirely. Time pressure changes how people evaluate options. Recent online shopping experiences affect trust thresholds. Even physical settings like background noise influence attention patterns. Predictive models incorporate these factors through behavioral attribution systems that connect personal traits with environmental conditions. A synthetic user gives you the same response regardless of context. A predictive model adjusts based on realistic scenarios.

Speed and Scale Differences That Matter

The promise of synthetic users centers on unlimited scale. You can theoretically generate thousands of responses within minutes. This sounds impressive until you examine what those responses contain. Most synthetic user platforms produce variations on similar themes: “I find this interface intuitive” or “The navigation could be clearer” repeated across hundreds of generated personas. The volume creates an illusion of comprehensive feedback while delivering limited actionable insights.

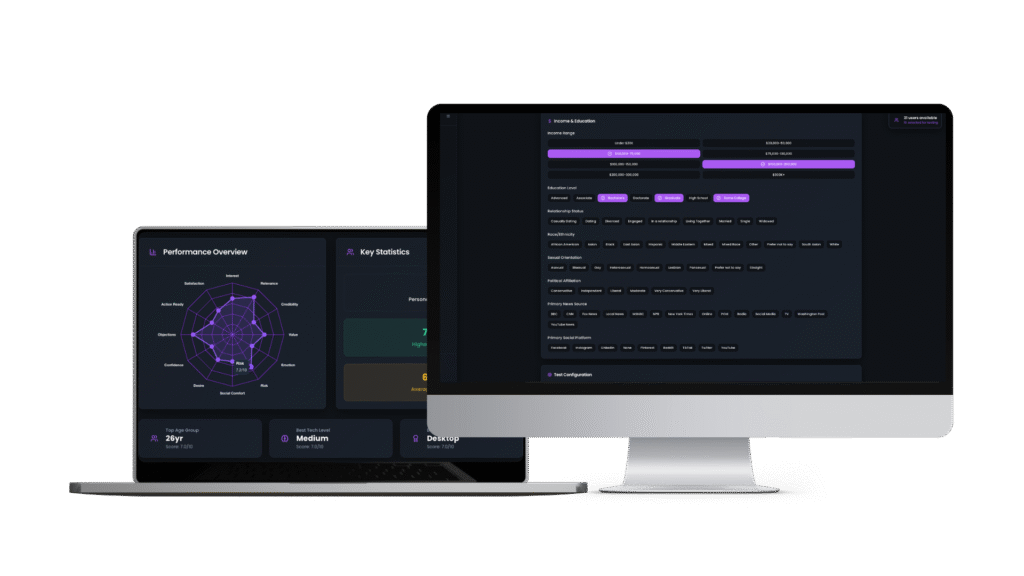

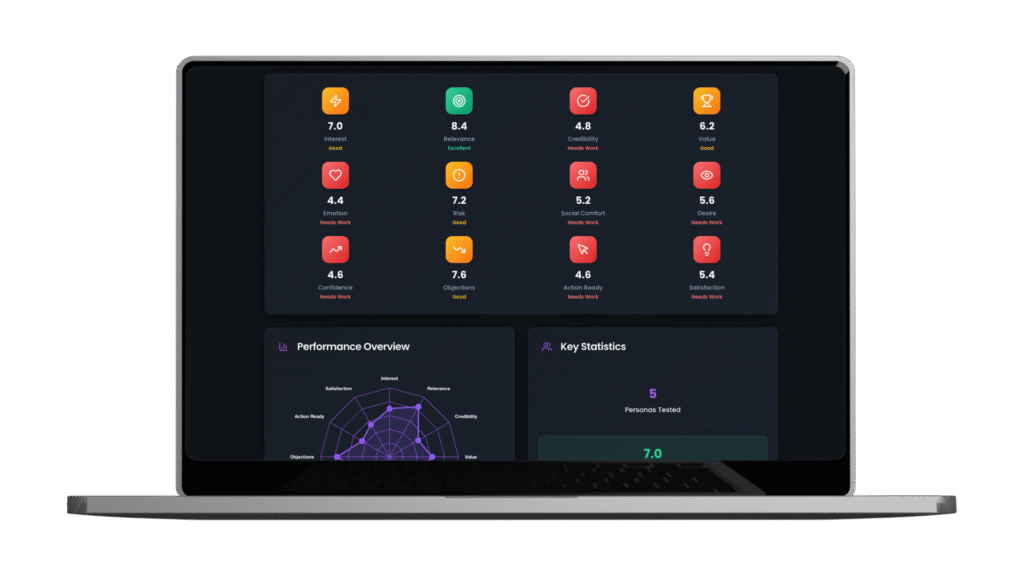

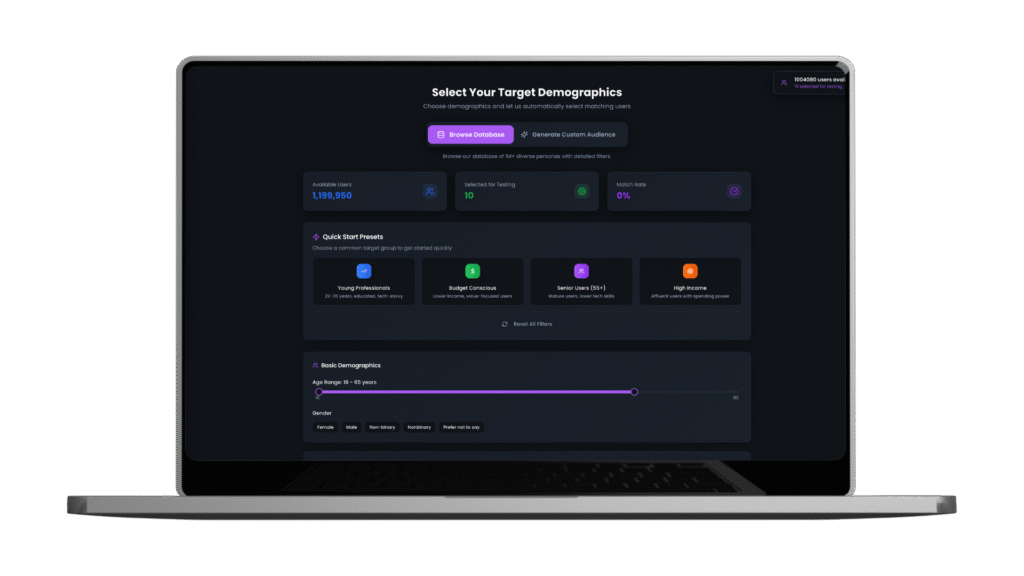

Predictive user research operates differently with scale. Instead of generating more responses, it provides deeper analysis across defined psychological dimensions. Evelance’s platform measures thirteen specific scores for each predictive audience model, from Interest Activation to Satisfaction Prediction. Running a test with 20 predictive models gives you 260 data points about how your design performs, each tied to specific demographic and behavioral attributes. You learn that women aged 28-35 in urban areas score your checkout flow 7.8 on Confidence Building but only 5.2 on Risk Evaluation, pointing to specific trust concerns during payment processing.
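The data-point arithmetic above is simply the number of audience models multiplied by the thirteen scores per model. A minimal sketch in Python, assuming every model returns all thirteen scores; the function and constant names are hypothetical and not part of any Evelance API:

```python
# Illustrative only: the article states thirteen scores per predictive
# audience model. The names below are hypothetical, not a real API.
SCORES_PER_MODEL = 13


def total_data_points(num_models: int, scores_per_model: int = SCORES_PER_MODEL) -> int:
    """Return the number of scored data points produced by one test run."""
    if num_models < 0:
        raise ValueError("num_models must be non-negative")
    return num_models * scores_per_model


print(total_data_points(20))  # 260, the 20-model test described above
print(total_data_points(10))  # 130
```

The count grows linearly with audience size, so doubling the number of models doubles the scored data points rather than adding new dimensions.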

The speed advantage becomes clearer when you consider iteration cycles. Synthetic users require prompt engineering to generate useful feedback. You write questions, generate responses, realize the feedback lacks specificity, rewrite prompts, and generate again. This trial-and-error process often takes longer than the actual response generation. Teams report spending days tweaking prompts to get synthetic users to produce actionable feedback about specific design elements.

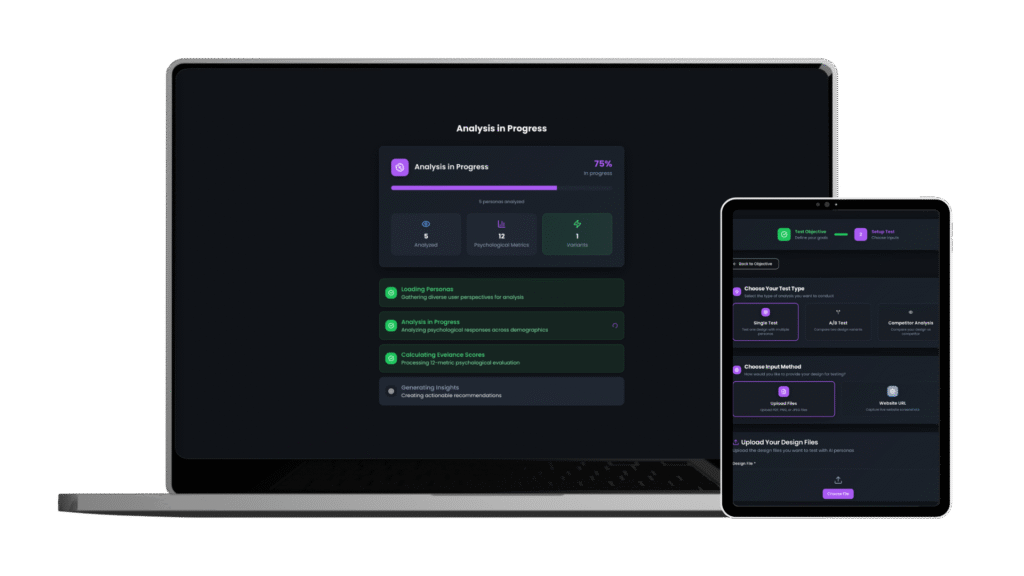

Predictive research eliminates this prompt engineering overhead. You upload your design, select your target audience from pre-built or custom models, and receive scored results within 30 minutes. The scoring framework remains consistent across tests, so comparing your original design against iterations takes minutes rather than hours of parsing through generated text. When Samantha from our healthtech example needed to validate prescription tracking interfaces, she completed two full iteration cycles in 48 hours using predictive models. The same validation using synthetic users would require multiple prompt refinement sessions before generating comparable insights.

Accuracy and Behavioral Realism

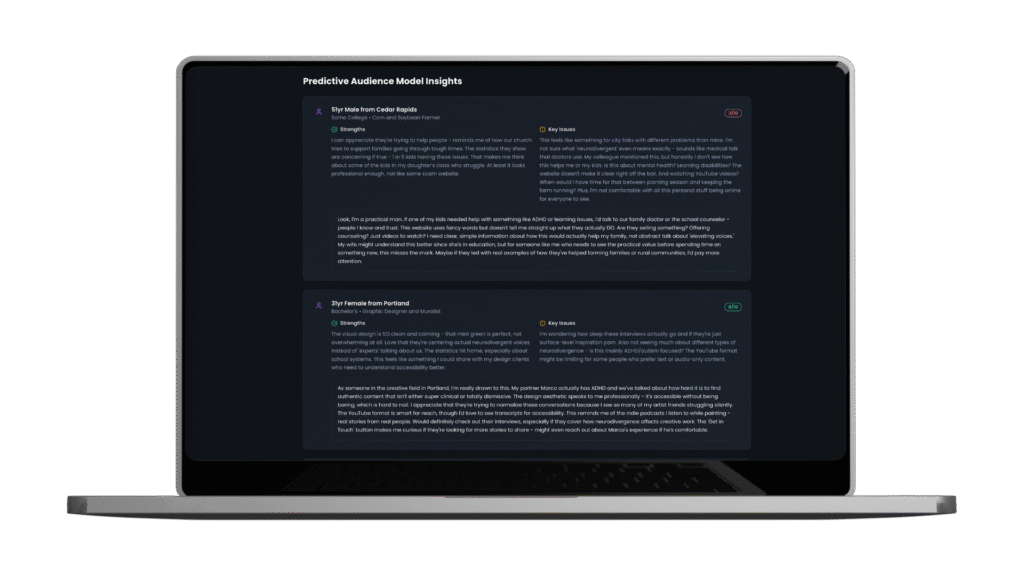

Here’s what synthetic user vendors won’t tell you: their systems often produce feedback that sounds reasonable but misses fundamental human psychology. A synthetic user might evaluate your premium pricing tier and generate a response like “The pricing seems fair for the features offered.” An actual human evaluating the same pricing experiences competing thoughts about budget constraints, comparisons to competitors they’ve used, skepticism about feature usefulness, and social pressure about spending decisions. Synthetic users flatten this complexity into simple statements.

Predictive user research captures these psychological layers through multi-dimensional scoring. When someone evaluates pricing, they’re simultaneously assessing credibility, calculating value, managing risk perception, and predicting their future satisfaction. Evelance’s system measures each dimension separately, revealing that your pricing might score high on Value Perception but low on Risk Evaluation. This granularity explains why users hesitate at checkout even when they believe your product offers good value. The risk concern needs addressing, not the value proposition.

The behavioral realism gap becomes obvious in A/B testing scenarios. Synthetic users comparing two homepage versions generate responses like “Version A is cleaner” or “Version B highlights benefits better.” These observations lack the psychological depth needed for design decisions. Predictive models comparing the same versions show that Version A scores 8.1 on Interest Activation but only 5.7 on Action Readiness, while Version B reverses this pattern with 6.2 on Interest Activation but 7.9 on Action Readiness. Version A grabs attention but fails to motivate action. Version B engages fewer people initially but converts those it does engage. This analysis guides specific optimization strategies that generic preference statements cannot provide.
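To make the Version A versus Version B comparison concrete, the sketch below computes the per-dimension difference from the four scores quoted above. It assumes scores arrive as plain name-to-number mappings; that data shape and the helper name are assumptions for illustration, not a documented export format.

```python
# Scores taken from the example in the text. The dict layout and the
# helper function are hypothetical, chosen only for illustration.
version_a = {"Interest Activation": 8.1, "Action Readiness": 5.7}
version_b = {"Interest Activation": 6.2, "Action Readiness": 7.9}


def compare_versions(a: dict, b: dict) -> dict:
    """Return the per-dimension difference (b minus a), rounded to one decimal."""
    shared = a.keys() & b.keys()  # only compare dimensions present in both
    return {dim: round(b[dim] - a[dim], 1) for dim in sorted(shared)}


deltas = compare_versions(version_a, version_b)
for dim, delta in deltas.items():
    leader = "B" if delta > 0 else "A" if delta < 0 else "tie"
    print(f"{dim}: A={version_a[dim]}, B={version_b[dim]}, "
          f"delta={delta:+.1f}, leader={leader}")
```

Because both versions are scored on the same dimensions, the comparison reduces to a subtraction per dimension, which is the property that generated preference statements lack.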

Real human behavior includes contradictions that synthetic users rarely capture. People claim they want simplicity while gravitating toward feature-rich options. They say price matters most but choose expensive brands for status reasons. They report logical preferences but make emotional decisions. Predictive models trained on actual behavioral data incorporate these contradictions. The Deep Behavioral Attribution in Evelance’s system records that a small business owner evaluating your software might score high on Interest Activation due to promised efficiency gains while simultaneously scoring low on Risk Evaluation due to previous bad experiences with subscription software. Synthetic users typically maintain consistent personas that miss these realistic tensions.

Cost Analysis Beyond Subscription Prices

The sticker price tells only part of the cost story. Synthetic user platforms often advertise low monthly fees or pay-per-response models that seem economical. But calculating true cost requires examining the entire validation workflow. When teams spend three days engineering prompts to extract useful feedback from synthetic users, those engineering hours represent real cost. When generated insights prove too generic to guide specific design changes, the resulting iteration cycles add expense. When you need to run traditional research anyway because synthetic feedback lacked credibility with stakeholders, you’ve paid twice for the same insights.

Predictive user research front-loads value delivery. The per-test cost might appear higher initially, but each test produces immediately actionable results. Evelance’s credit system means you pay for exactly the validation you need. Testing a single design with 10 predictive audience models costs 10 credits and delivers 130 scored data points plus prioritized recommendations. The same depth of insight from synthetic users would require generating hundreds of responses, manually categorizing feedback themes, and still lacking the quantitative scoring that makes comparison straightforward.

The hidden costs of synthetic users extend to decision-making delays. When your design team receives fifty paragraphs of generated feedback, someone needs to synthesize that information into actionable recommendations. This analysis phase often takes longer than generating the feedback itself. Teams report spending full days parsing synthetic user responses to identify patterns and priorities. Predictive research eliminates this analysis overhead by delivering pre-prioritized recommendations based on psychological scoring. You know immediately that fixing Credibility Assessment should precede optimizing Interest Activation because the scoring differential shows greater impact on conversion.
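One simple way to reason about prioritization is to rank dimensions by how far each score sits below the top of the 10-point scale. The sketch below does only that. It is an assumption for illustration, not Evelance’s actual prioritization logic, which the article does not describe, and the two input scores are borrowed from separate examples earlier in the text.

```python
# Hypothetical gap-based ranking. The real platform's prioritization
# method is not documented here; this is an illustrative stand-in.
def rank_by_gap(scores: dict, target: float = 10.0) -> list:
    """Sort dimensions by how far each score falls below target, largest gap first."""
    gaps = {dim: round(target - score, 1) for dim, score in scores.items()}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Example values reused from earlier passages, combined only for illustration.
scores = {"Credibility Assessment": 4.2, "Interest Activation": 8.1}
priorities = rank_by_gap(scores)

for dim, gap in priorities:
    print(f"{dim}: {gap} points below target")
```

With these inputs Credibility Assessment ranks first because it has the larger gap. A real prioritization would presumably also weigh each dimension’s effect on conversion, which a raw gap does not capture.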

Consider the opportunity cost of slow validation cycles. Every week your product team spends debating ambiguous synthetic user feedback represents delayed market entry. If predictive research compresses a month-long validation cycle to three days, that acceleration might mean beating competitors to market or capturing seasonal demand peaks. The revenue impact of faster launches often exceeds any subscription cost differences between platforms.

Integration with Existing Research Workflows

The real test of any research tool comes during integration with existing processes. Synthetic users create an awkward fit because they generate qualitative feedback that doesn’t align with other research outputs. Your usability tests produce specific task completion rates and error frequencies. Your analytics show precise conversion funnels and user flows. Your surveys generate numerical satisfaction scores. Then synthetic users deliver paragraphs of generated opinions that don’t map clearly to these metrics. Research teams struggle to combine these different data types into coherent recommendations.

Predictive user research produces outputs that complement existing research methods. The psychological scoring system creates quantitative baselines you can track across iterations. When traditional usability testing reveals that users struggle with your checkout flow, predictive research explains why through specific scores on Risk Evaluation and Confidence Building. When analytics show high abandonment rates on your pricing page, predictive models identify which psychological factors drive those exits. The numerical scoring creates apples-to-apples comparisons with other quantitative research methods.

Evelance specifically designed its platform to accelerate rather than replace traditional research. Product teams use predictive testing to identify focus areas before recruiting participants for interviews. Instead of spending interview time on broad exploratory questions, researchers probe specific concerns that predictive models highlighted. This preparation makes every interview minute more valuable. Samantha’s healthtech team cut their research cycle from six weeks to five days by using Evelance to front-load discovery before targeted validation interviews.

The workflow integration extends to stakeholder communication. Executives understand numerical scores and visual comparisons more readily than pages of synthetic user quotes. When you present that your new design scores 8.3 on Value Perception versus the current version’s 6.1, that comparison drives decisions. Radar charts showing psychological dimensions create immediate visual impact in board presentations. Synthetic user feedback requires extensive summarization and interpretation before reaching this level of clarity, and even then, stakeholders often question the validity of generated responses.

Making the Practical Choice for Your Product Team

The comparison between predictive user research and synthetic users ultimately comes down to what you need from augmented research. If you want quick generated text that sounds like user feedback for internal brainstorming sessions, synthetic users might suffice. But if you need research that actually predicts how real users will respond to your design, guides specific improvements, and accelerates validation cycles, the choice becomes obvious.

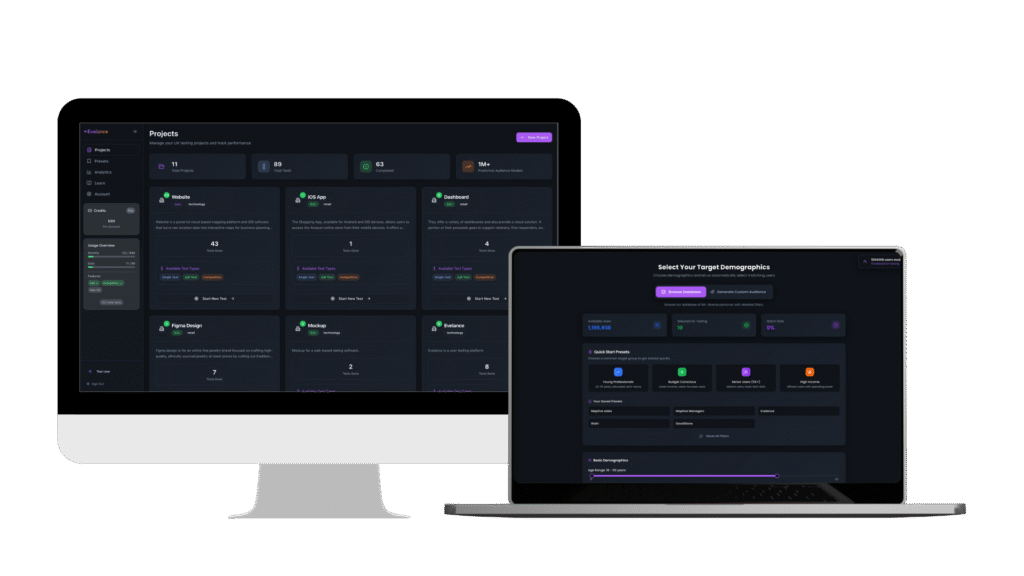

Predictive user research through platforms like Evelance delivers measurable psychological insights grounded in demographic reality. You upload your design and within 30 minutes receive scored analysis across thirteen psychological dimensions. Each score ties to specific user attributes and behavioral patterns. The prioritized recommendations tell you exactly what to fix first and why those changes will improve conversion. This combination of specificity and speed compresses validation cycles from weeks to days while maintaining research credibility.

The enterprise security and professional support that come with Evelance add another layer of practical value. Your team gets onboarding assistance, priority feature development input, and account management that ensures successful integration. The platform handles everything from automatic screenshot capture to report generation, removing technical barriers that often slow research cycles. With over one million predictive audience models available and the ability to create custom audiences through natural language descriptions, you can validate designs against your exact target segments rather than generic personas.

Product teams that switched from synthetic users to Evelance’s predictive research report three consistent improvements: validation cycles compress by 75 percent, stakeholder buy-in increases due to quantitative scoring, and design iterations become more focused because recommendations target specific psychological factors. The credit-based pricing model means you pay only for the validation you need, with monthly plans starting at 100 credits and annual plans offering 1,200 credits at reduced per-test costs.

The evidence points clearly toward predictive user research as the superior choice for teams serious about augmented validation. While synthetic users offered an interesting experiment in AI-generated feedback, predictive research delivers the speed, accuracy, and actionable insights that actually accelerate product development. The question isn’t really which approach to choose but rather how quickly you can integrate predictive research into your workflow to start shipping better products faster.


Sep 25, 2025
