Product teams know the cycle well. You recruit participants, schedule interviews, run usability tests, analyze findings, and wait weeks for actionable feedback. This timeline creates friction between design iterations and product launches, and the delay compounds when you need multiple rounds of validation. Evelance changes this equation by running predictive user research alongside traditional methods, compressing validation cycles from weeks to hours while maintaining research depth.

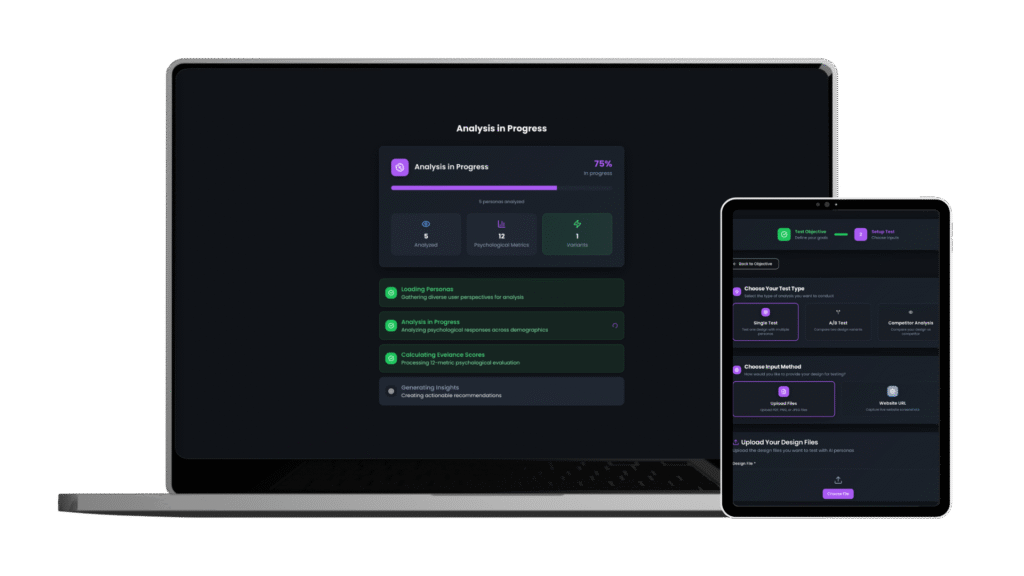

The platform uses AI-powered predictive audience models to simulate realistic user reactions before you commit to lengthy field studies. Think of it as running a preliminary round of testing that shapes your actual user interviews into sharper, more focused conversations. You still conduct traditional research, but now you start with specific hypotheses rather than broad questions.

1. Front-Loading Discovery Before Field Research Begins

Traditional discovery research starts with recruitment, which can take days or weeks depending on your target audience. You then schedule interviews, conduct sessions, transcribe recordings, and synthesize findings. This process typically spans three to four weeks for a standard study with 8-12 participants. During this time, your design team waits for direction, and development timelines stretch.

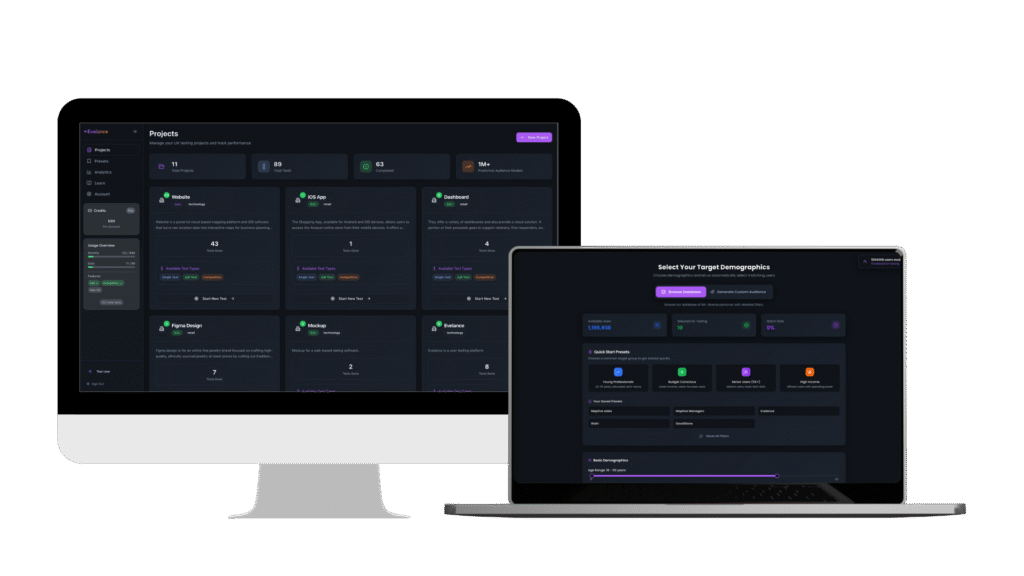

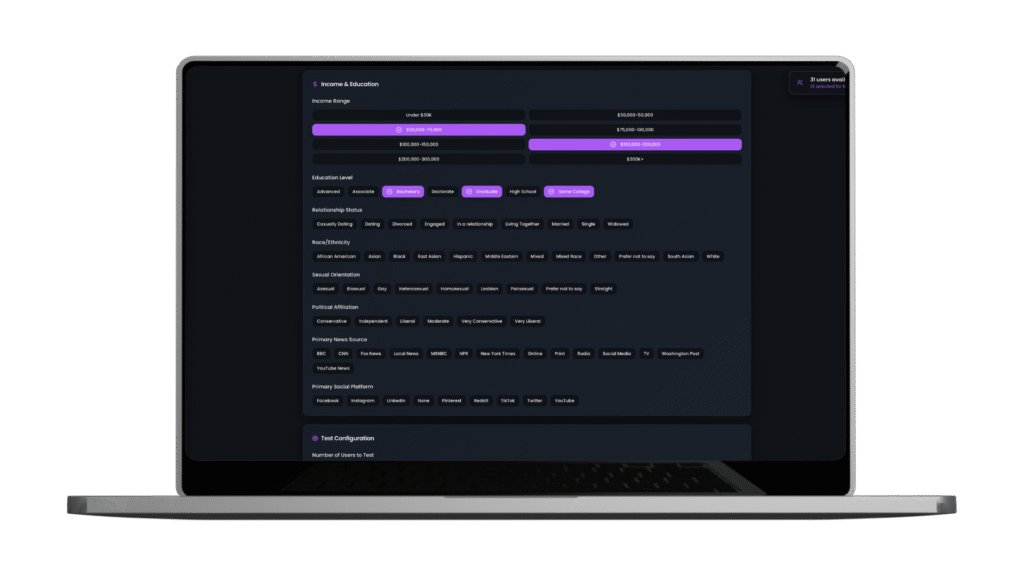

Evelance compresses this discovery phase by generating predictive personas that match your exact target audience. You can select from over one million profiles with precise attributes including age, location, income, education, and more than 1,700 job types. Each profile includes Deep Behavioral Attribution that records personal stories, key life events, professional challenges, and core motivations. The platform factors in personal and environmental inputs such as time pressure, financial situations, prior online interactions, and even physical settings to ground results in realistic context.

When you upload your designs, these predictive models provide immediate reactions. A healthtech product manager testing prescription tracking mockups might receive feedback like “I wouldn’t link my pharmacy account until I see proof that this app is HIPAA-compliant” or “The reminder setup asks me to enter times manually. I’d prefer pre-filled schedules based on typical refill cycles.” These insights arrive within minutes rather than weeks, and they help you identify pain points before recruiting your first participant.

This approach transforms how you structure subsequent research. Instead of asking broad questions like “What do you think about linking your pharmacy account?” you can ask targeted ones like “What specific assurances would make you comfortable linking your pharmacy account?” Your moderator guides become sharper because you already know where users hesitate.

2. Testing Multiple Design Variants Simultaneously

Running A/B tests through traditional research doubles or triples your timeline. You need separate participant groups for each variant, which means more recruitment, more sessions, and more analysis time. Many teams skip variant testing entirely because the time investment doesn’t align with launch schedules. This leads to picking designs based on internal preferences rather than user data.

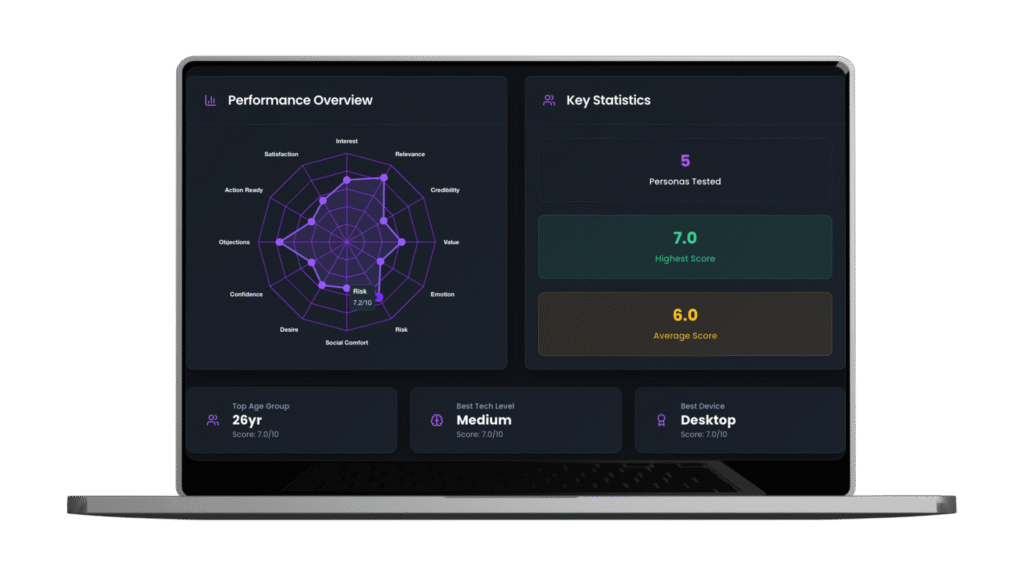

Evelance runs parallel tests across multiple designs using the same predictive audience. You can test single designs, run A/B comparisons, or benchmark against competitors, all within the same testing session. The platform measures 13 psychological scores for each design, including Interest Activation, Credibility Assessment, Value Perception, and Action Readiness. These scores provide quantitative comparison points that traditional qualitative research often lacks.

For A/B testing, you upload both variants and receive side-by-side score comparisons with statistical indicators showing which version performs better on each psychological dimension. The platform identifies clear winners and explains the margin of victory. If Design A scores 7.2 on Credibility Assessment while Design B scores 5.8, you know exactly where one variant excels. The platform then provides specific recommendations for improving the weaker design based on psychological reasoning.
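The per-dimension comparison described above can be sketched in a few lines. This is a minimal illustration, not Evelance's API or export format: the `compare_variants` helper and the dictionary layout are hypothetical, and only the Credibility Assessment values (7.2 and 5.8) come from the example in the text. The Action Readiness values are made up purely to show a dimension where Design B wins. The sketch compares single scores only; the platform's statistical indicators presumably draw on more information than one number per design.

```python
# Hypothetical sketch of a per-dimension A/B comparison.
# Dimension names come from the article; the data structure, helper name,
# and Action Readiness values are illustrative assumptions.
from typing import Dict, Tuple

Scores = Dict[str, float]


def compare_variants(a: Scores, b: Scores) -> Dict[str, Tuple[str, float]]:
    """For each dimension scored in both designs, return (winner, margin)."""
    results: Dict[str, Tuple[str, float]] = {}
    for dimension in sorted(a.keys() & b.keys()):
        # Round to two decimals to hide floating-point noise (7.2 - 5.8 etc.).
        margin = round(a[dimension] - b[dimension], 2)
        if margin > 0:
            winner = "A"
        elif margin < 0:
            winner = "B"
        else:
            winner = "tie"
        results[dimension] = (winner, abs(margin))
    return results


# Credibility Assessment values are from the article; Action Readiness is invented.
design_a = {"Credibility Assessment": 7.2, "Action Readiness": 6.1}
design_b = {"Credibility Assessment": 5.8, "Action Readiness": 6.4}

for dimension, (winner, margin) in compare_variants(design_a, design_b).items():
    print(f"{dimension}: {winner} by {margin}")
```

Reporting the winner per dimension rather than as one overall verdict mirrors the point in the text: a variant can lead on Credibility Assessment while trailing elsewhere, and the recommendations target the specific dimensions where it falls short.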

This simultaneous testing capability means you can explore more options before committing development resources. A product team might test five different onboarding flows in the time it would take to validate one through traditional methods. Each test uses credits based on the number of predictive audience models selected, so 10 personas evaluating one design costs 10 credits. This pricing structure makes extensive testing economically feasible compared to recruiting dozens of participants for each variant.

3. Generating Demographic-Specific Insights at Scale

Traditional research often struggles with demographic representation. Recruiting enough participants across age groups, income levels, geographic regions, and professional backgrounds becomes prohibitively expensive. Most studies settle for 8-12 participants who loosely match target demographics, which limits your ability to spot patterns across user segments.

Evelance’s Intelligent Audience Engine generates predictive models backed by publicly available data. You can build custom audiences by describing target users in plain English, such as “working mothers aged 28-42 who shop online for family essentials.” The platform instantly generates personas with realistic backgrounds, including health considerations, lifestyle preferences, technology comfort levels, and accessibility needs. Each generated persona comes with a realistic life story and motivations that inform its responses.

The platform’s Dynamic Response Core adjusts reactions based on contextual factors. A predictive model representing a busy executive evaluates your design differently when simulated during a high-pressure workday versus a relaxed evening. These contextual variations reveal how your design performs across different user states and environments. You might discover that your checkout flow works well for users with time to browse but frustrates those making quick purchases during lunch breaks.

Scale becomes an advantage rather than a cost burden. You can test with 5-8 personas for quick directional insights, 10-15 for comprehensive coverage, or 20+ for deep statistical analysis. The platform shows demographic correlations in results, revealing patterns like lower-tech users scoring higher on Risk Evaluation or younger participants responding better to specific design elements. These patterns would require hundreds of traditional research participants to identify reliably.

4. Reducing Iteration Cycles Through Immediate Validation

The traditional research loop follows a predictable pattern. You test a design, wait for results, implement changes based on findings, then schedule another round of testing to validate improvements. Each cycle takes weeks, and teams often skip validation testing due to time constraints. This leads to launching designs with unvalidated assumptions about which changes actually improved user outcomes.

Evelance collapses this iteration cycle to hours or days. After receiving initial feedback and psychological scores, you can modify your design and immediately retest with the same predictive audience. The platform shows score improvements across all 13 psychological dimensions, confirming which changes moved metrics in the right direction. If adding a HIPAA compliance badge improves Credibility Assessment from 5.8 to 7.4, you have quantitative proof that the change addressed user concerns.

This rapid validation extends to implementation details. The platform provides prioritized action lists that identify high-impact, low-effort changes first. Each recommendation includes specific solutions, psychological reasoning for why the change will work, and implementation tips for applying modifications effectively. You might learn that simplifying your value proposition copy will improve both Relevance Recognition and Action Readiness scores, with exact wording suggestions based on what resonated with your predictive audience.

The speed of iteration creates compound benefits. A team that would traditionally run two research cycles in two months can now run ten cycles in the same timeframe. Each iteration builds on validated improvements from the previous round. This progressive refinement leads to designs that score consistently high across all psychological dimensions before entering development.

5. Informing Traditional Research With Predictive Insights

Predictive research doesn’t replace traditional methods but makes them more effective. When you enter user interviews with specific hypotheses generated by Evelance, you spend less time on surface-level discovery and more time understanding nuanced user motivations. Your research questions become surgical rather than exploratory.

Consider how this changes usability testing protocols. Instead of asking participants to complete broad tasks while you observe, you can focus sessions on specific friction points identified through predictive testing. If Evelance reveals that users hesitate at your payment screen due to unclear security messaging, your usability test can probe deeply into what security indicators would build confidence. You already know the problem exists, so now you’re validating solutions rather than searching for issues.

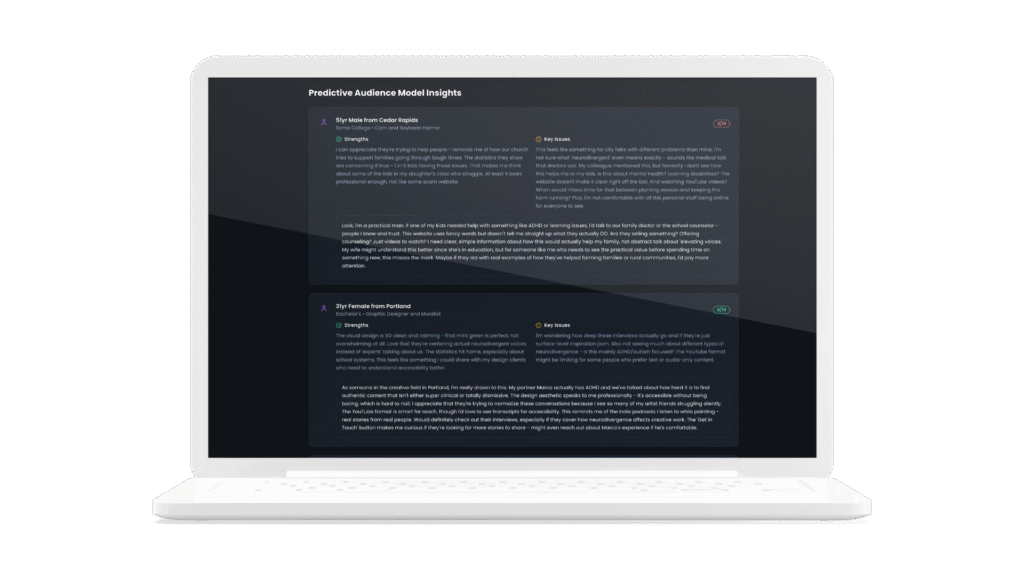

The platform’s individual persona insights provide another layer of preparation. Each predictive model generates realistic feedback that explains its reasoning. Reading through these responses before conducting interviews helps you anticipate participant perspectives and prepare follow-up questions. You might notice that personas with certain professional backgrounds consistently misunderstand a feature, prompting you to explore that correlation in live sessions.

Post-research validation becomes equally streamlined. After conducting traditional research and implementing changes based on participant feedback, you can run Evelance tests to verify improvements before committing to development. This creates a feedback loop where predictive and traditional research reinforce each other. Traditional research provides depth and nuance, while predictive research provides speed and scale.

Transforming Research Economics

The economics of user research have always favored companies with large budgets. Comprehensive studies cost tens of thousands of dollars when you factor in recruitment, incentives, facilities, and analysis time. Smaller teams often skip research entirely or rely on informal feedback from colleagues and friends. Evelance changes this calculation by offering subscription plans that make robust research accessible to teams of all sizes.

At $399 monthly for 100 credits or $4,389 annually for 1,200 credits, teams can run extensive testing programs for less than the cost of a single traditional study. Each credit represents one predictive audience model in a test, so strategic use of credits allows dozens of tests per month. The platform includes features previously reserved for enterprise research tools: project management, test history, export capabilities, and secure authentication.
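The plan prices above reduce to simple per-credit arithmetic. This sketch uses only the figures quoted in the text ($399 for 100 credits per month, $4,389 for 1,200 credits per year) and derives everything else; `Decimal` is used so currency values stay exact. The 10-persona test cost assumes, as the text states, that one credit covers one predictive audience model.

```python
# Per-credit arithmetic for the two plans quoted in the article.
# Prices and credit counts come from the text; the rest is derived.
from decimal import Decimal

MONTHLY_PRICE = Decimal("399")
MONTHLY_CREDITS = 100
ANNUAL_PRICE = Decimal("4389")
ANNUAL_CREDITS = 1200

monthly_per_credit = MONTHLY_PRICE / MONTHLY_CREDITS          # 3.99
annual_per_credit = ANNUAL_PRICE / ANNUAL_CREDITS             # 3.6575
twelve_monthly_payments = MONTHLY_PRICE * 12                  # 4788
annual_savings = twelve_monthly_payments - ANNUAL_PRICE       # 399

# One credit per persona, so a 10-persona test on the monthly plan:
ten_persona_test = monthly_per_credit * 10                    # 39.90

print(f"Monthly plan: ${monthly_per_credit} per credit")
print(f"Annual plan: ${annual_per_credit} per credit")
print(f"Annual billing saves ${annual_savings} over twelve monthly payments")
print(f"10-persona test on the monthly plan: ${ten_persona_test}")
```

The annual price works out to exactly eleven monthly payments, so annual billing effectively includes one month free.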

The time savings translate directly to reduced development costs. When teams can validate designs before engineering begins, they avoid expensive rework cycles. A design that scores poorly on Value Perception or Action Readiness won’t suddenly perform better after months of development. Catching these issues early prevents wasted sprints and keeps products on schedule for launch.

Conclusion

Predictive user research through Evelance doesn’t eliminate the need for traditional research. Instead, it amplifies research impact by providing rapid validation cycles, demographic scale, and quantitative psychological scoring that complement qualitative insights. Product teams can now test assumptions in hours, iterate based on data rather than opinions, and enter traditional research sessions with focused hypotheses.

The platform’s ability to generate realistic predictive personas, measure psychological responses across 13 dimensions, and provide actionable recommendations transforms research from a bottleneck into an accelerator. Teams that previously waited weeks for basic validation can now test multiple variants, explore demographic segments, and refine designs through rapid iteration cycles.

Research acceleration means more than speed. It means testing more ideas, catching problems earlier, and building products with higher confidence in user outcomes. When every design decision can be validated quickly and affordably, teams make fewer assumptions and more evidence-based choices. That’s how predictive research accelerates not only traditional research but entire product development cycles.


Sep 24, 2025