User research budgets have a way of disappearing faster than anyone expects. You set aside what feels like enough, run a few studies, and suddenly you’re looking at empty accounts halfway through the quarter. If you’ve been using Maze for your testing needs, you already know the platform works well. But you’ve probably also noticed how quickly participant costs add up, especially when you’re recruiting B2B professionals at 45 credits each or running frequent validation rounds.

We built Evelance to work alongside tools like Maze rather than replace them. It handles the portions of research where traditional panels drain your budget without adding proportional value.

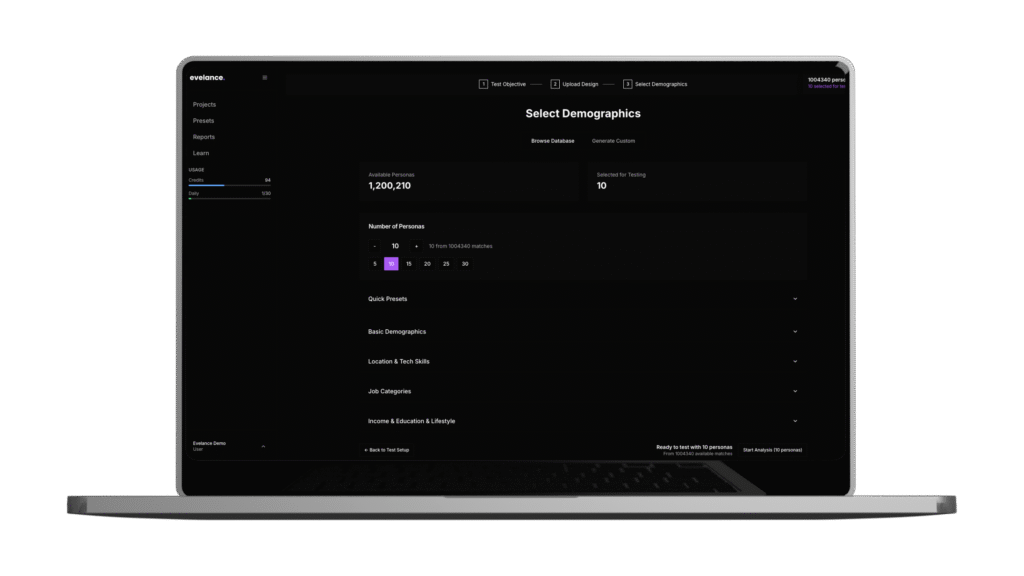

Evelance gives you access to over 2,000,000 personas, filtered by profession, health conditions, accessibility needs, and behavioral context. Tests complete in minutes rather than days. And because we don’t require incentives or scheduling, the cost structure looks completely different.

This article walks through 10 specific ways teams are using Evelance to lower their Maze-related research expenses while improving what they learn in the process.

TL;DR

- Pre-validate with Evelance before Maze studies to catch fundamental issues early and save participant recruitment costs

- Use predictive personas for initial screening rather than paying $570+ in traditional panel incentives for 10 participants

- Run competitor analysis through Evelance at up to 66% lower cost than traditional methods

- Replace iterative testing cycles with rapid AI-powered validation that completes in minutes

- Access hard-to-reach audiences without premium recruitment fees through our library of over 2,000,000 personas

- Generate executive-ready reports automatically using our Synthesis feature, cutting analysis time by 50%

- Test across 12 psychological dimensions to surface problems before committing to expensive live studies

- Simulate real-world usage environments, including distractions that traditional controlled testing misses

- Scale validation cycles without proportional cost increases

- Reserve Maze credits for high-value qualitative sessions where human feedback matters most

Quick Comparison: Evelance vs. Traditional Panel Costs

| Cost Factor | Traditional Maze Panel | Evelance |
| --- | --- | --- |
| 10-participant B2C test | ~$570 (incentives alone) | $29.90 |
| 10-participant B2B test | Higher (45 credits each) | $29.90 |
| Time to complete | Days to weeks | Minutes |
| Scheduling required | Yes | No |
| Incentive management | Yes | No |
| Analysis time | Manual synthesis | 95% faster with AI |
| Annual platform cost (typical) | $12,000+ median | Starting at $4,389 |
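
The per-test figures in the table translate into a savings percentage with a few lines of arithmetic. The sketch below uses only the two B2C numbers quoted above (roughly $570 in incentives versus $29.90). The function and constant names are illustrative, and the result leaves out platform subscription costs, so treat it as a rough per-test comparison rather than a full budget model.

```python
def savings_percent(traditional_cost: float, alternative_cost: float) -> float:
    """Return the percentage saved by paying alternative_cost instead of traditional_cost."""
    if traditional_cost <= 0:
        raise ValueError("traditional_cost must be positive")
    return (traditional_cost - alternative_cost) / traditional_cost * 100


# Figures taken from the comparison table (10-participant B2C test).
TRADITIONAL_B2C_TEST = 570.00  # approximate incentive cost, USD
EVELANCE_TEST = 29.90          # cost of a ten-persona test, USD

b2c_savings = savings_percent(TRADITIONAL_B2C_TEST, EVELANCE_TEST)
print(f"Per-test savings on a B2C study: {b2c_savings:.1f}%")
```

Swapping in your own incentive spend for `TRADITIONAL_B2C_TEST` gives a figure specific to your panel costs.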

1. Pre-Validate Designs Before Spending on Live Participants

The biggest cost reduction comes from catching problems before you pay for human feedback. Global App Testing research shows that fixing an error after product release costs 4 to 5 times more than during the design phase. That same principle applies to research timing.

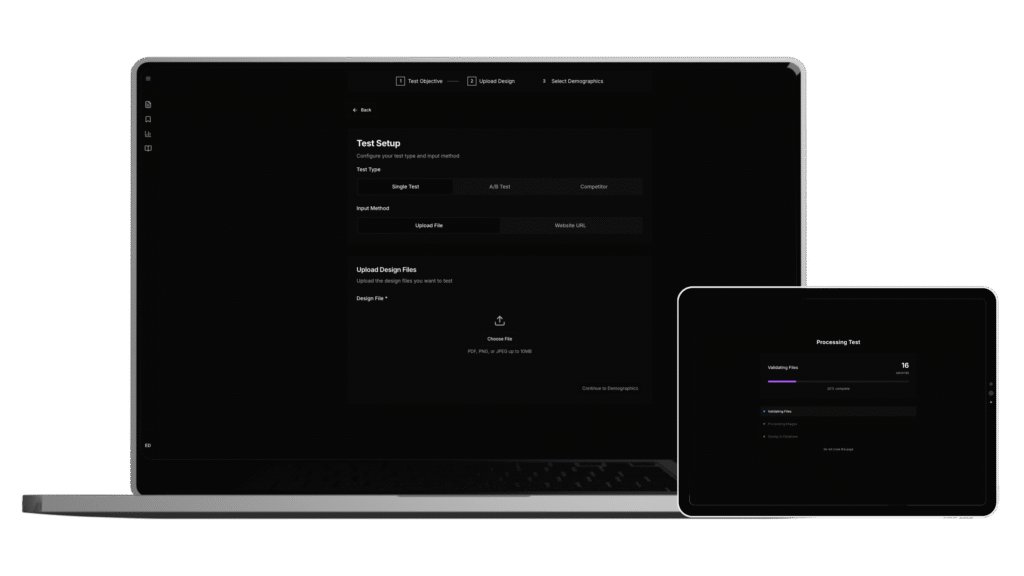

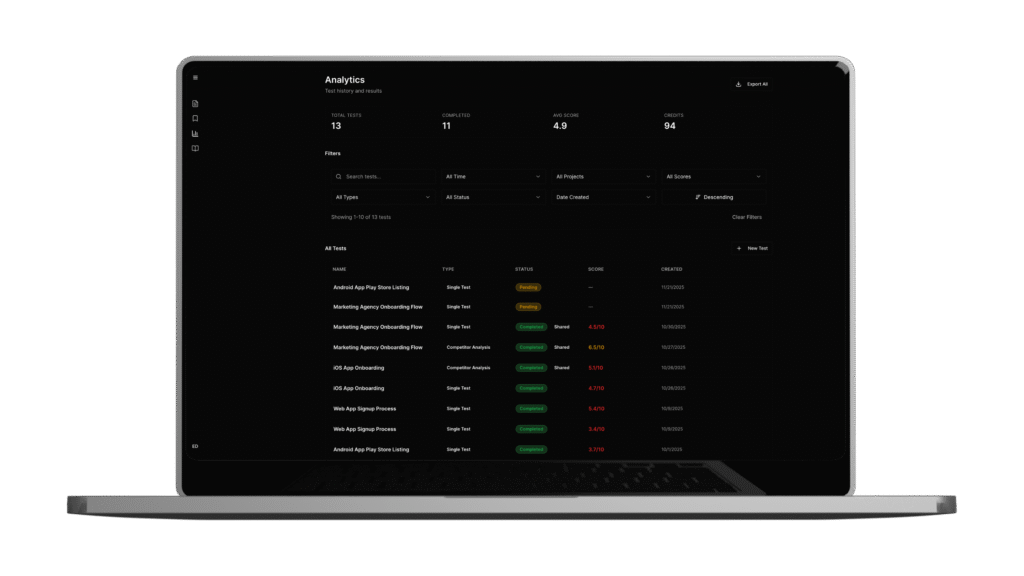

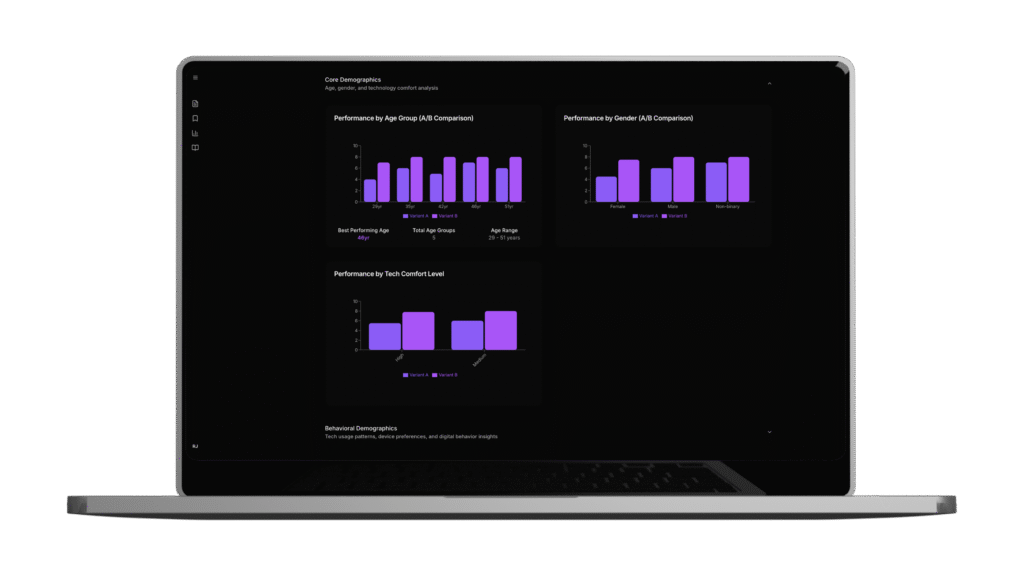

When you run a Maze study on a design with fundamental usability problems, you’re paying full participant rates to discover issues you could have caught earlier. Our platform measures 12 psychological dimensions including Interest Activation, Credibility Assessment, and Action Readiness. These scores tell you where your design fails before you spend recruitment dollars confirming the same thing.

Teams report finding 40% more insights when live sessions explore pre-validated designs rather than discovering basic problems during expensive participant time.

2. Replace Iterative Cycles with Rapid Testing

Traditional research cycles eat budgets through repetition. You test, find problems, revise, then test again. Each cycle means new participant recruitment, new incentives, and more waiting.

Evelance tests complete in minutes. You can run 5 iterations in a single afternoon for what one traditional round costs. This speed changes how you approach revision. Instead of batching changes and hoping your assumptions hold, you validate each adjustment as you make it.

For teams spending $10,000 on traditional research, our usage structure allows 340 ten-persona tests. That volume of validation would be impossible at traditional panel rates.
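
To make the volume difference concrete, the short sketch below divides the same $10,000 budget two ways. It relies only on figures already quoted in this article (340 ten-persona tests, and roughly $570 in incentives for one 10-participant traditional round). The variable names are illustrative, and the calculation ignores subscription fees on either side.

```python
import math

BUDGET = 10_000          # research budget from the paragraph above, USD
TESTS_QUOTED = 340       # ten-persona tests this budget covers, per the article
TRADITIONAL_ROUND = 570  # approximate incentive cost of one 10-participant round, USD

# Cost per test implied by the quoted volume.
implied_cost_per_test = BUDGET / TESTS_QUOTED

# Whole traditional rounds the same budget would fund on incentives alone.
traditional_rounds = math.floor(BUDGET / TRADITIONAL_ROUND)

print(f"Implied cost per ten-persona test: ${implied_cost_per_test:.2f}")
print(f"Traditional rounds for the same budget: {traditional_rounds}")
print(f"Validation volume multiplier: {TESTS_QUOTED / traditional_rounds:.0f}x")
```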

3. Access Hard-to-Reach Audiences Without Premium Fees

Recruiting participants with rare health conditions, specific accessibility needs, or unusual professional backgrounds typically costs extra. Sometimes it costs so much that teams drop those requirements entirely, leaving gaps in their understanding.

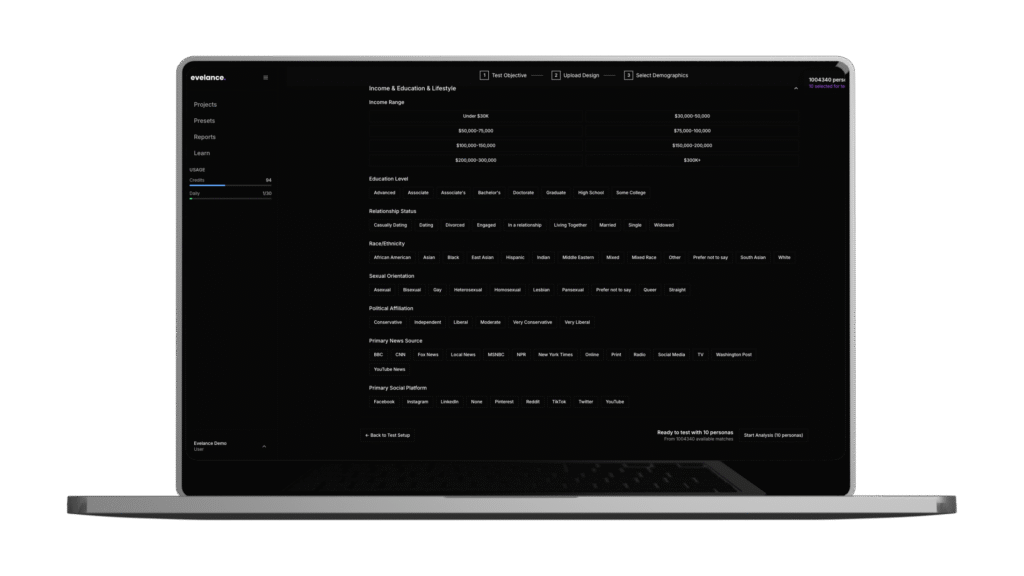

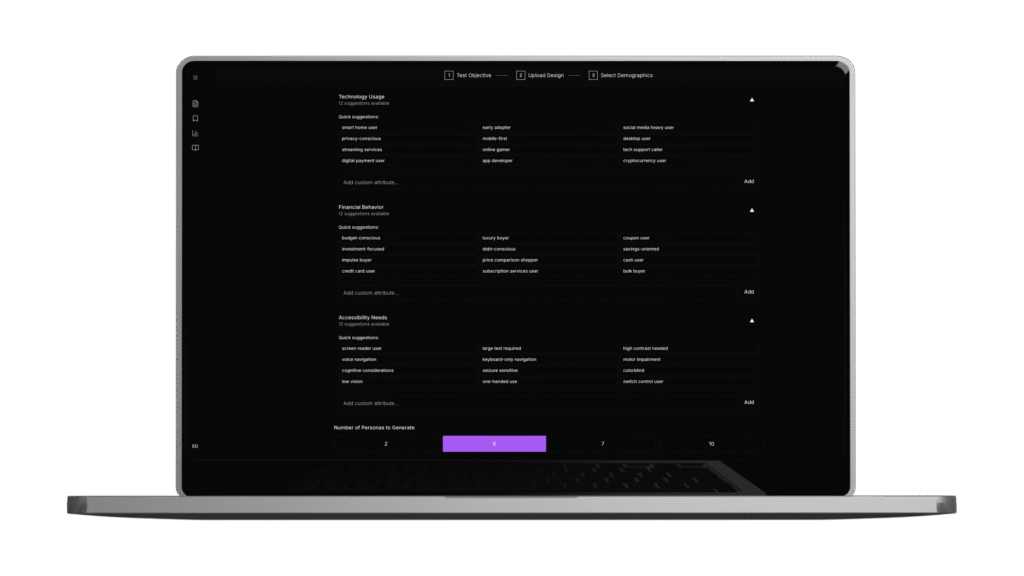

Our Custom Audience Builder lets you layer profession, health conditions, technology preferences, financial situations, and accessibility needs simultaneously. Traditional panels force you to compromise. We deliver the complete combination.

You can build audiences with specific accessibility requirements, rare health conditions, or unique cultural contexts and generate representation for segments traditional research excludes entirely. No premium recruitment fees, no waiting weeks for enough participants to qualify.

4. Cut Competitor Analysis Costs by Up to 66%

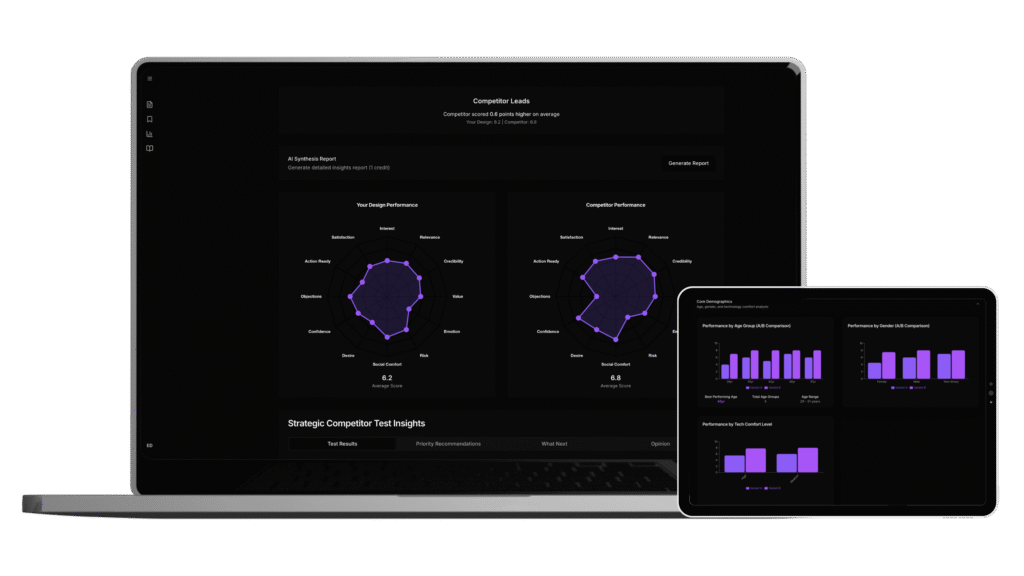

Understanding how your designs perform against competitors usually means running parallel studies, effectively doubling or tripling your research spend. You need the same participant profiles evaluating multiple products under similar conditions.

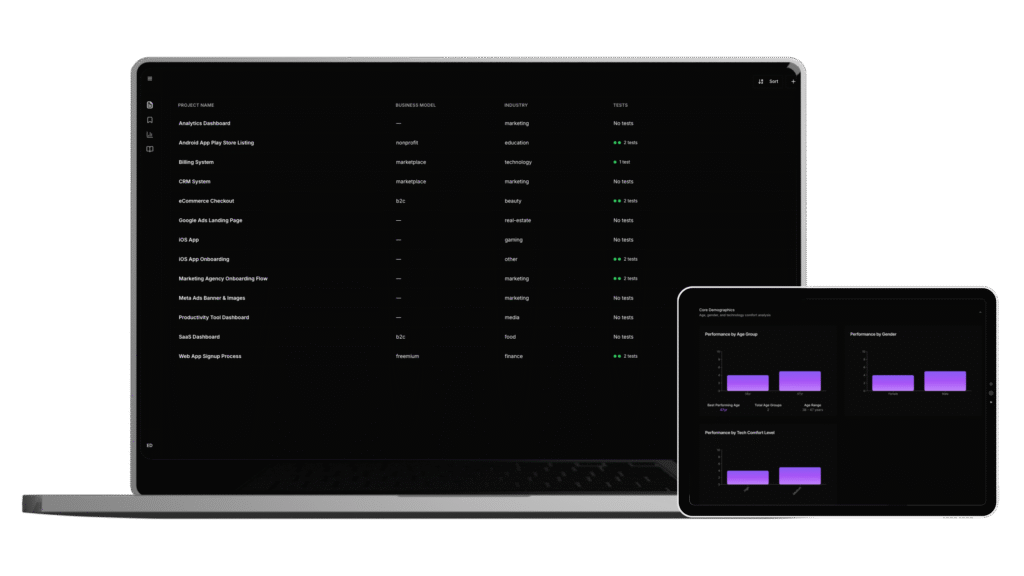

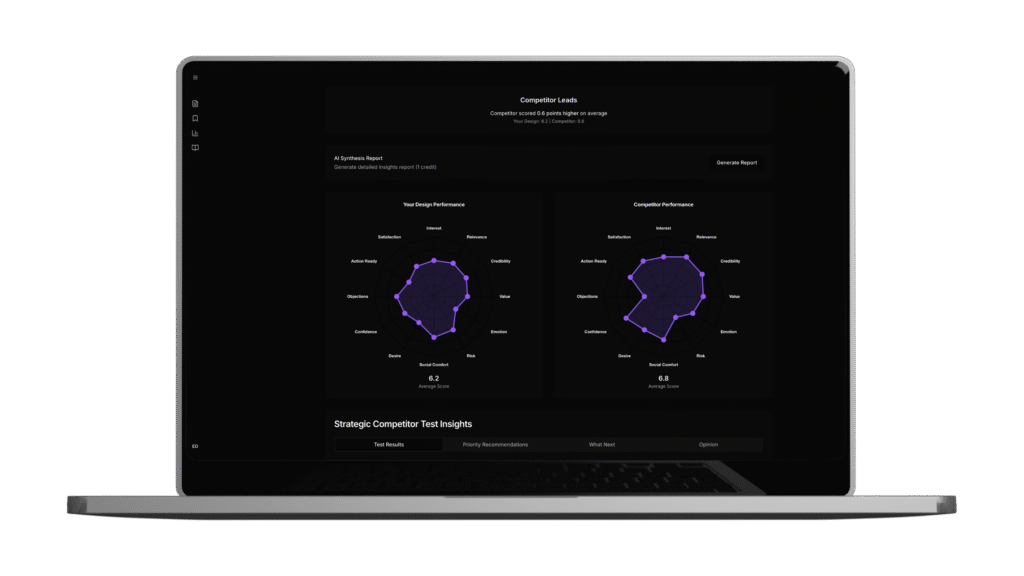

Our competitor analysis feature tests your designs against competitors using the same predictive models in a single study. Teams have reduced competitive analysis costs by up to 66% through this approach. The insights arrive faster too, since you’re not coordinating multiple recruitment efforts.

5. Eliminate Scheduling and Incentive Management

Every hour your team spends managing participant schedules is an hour not spent on actual research. Incentive payments create administrative overhead. No-shows waste reserved time slots. Timezone coordination across international panels adds complexity.

Evelance runs without any of this. You upload your design, select your audience parameters, and receive results. No calendars to manage. No gift cards to distribute. No apologetic emails to participants who experienced technical difficulties.

The operational savings compound over time, freeing your research team to focus on analysis and recommendations rather than logistics.

6. Use AI Synthesis to Cut Analysis Time to Minutes

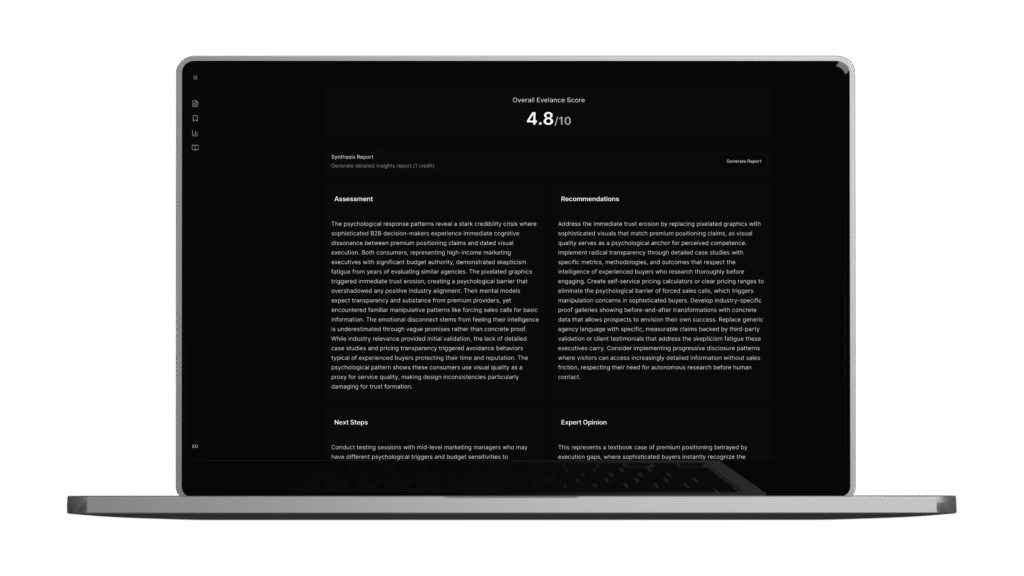

Raw research data requires interpretation. Transcripts need review. Patterns need identification. This phase of research often takes longer than data collection itself.

Our Synthesis feature, powered by evelanceAI, transforms raw outputs into executive-ready reports for a single credit. The automation reduces analysis time by 50%, helping teams move from testing to implementation faster.

The generated reports include radar charts of psychological scores, individual persona responses, and specific recommendations ranked by impact. You still apply your own judgment to the findings, but the heavy lifting of organization and formatting happens automatically.

7. Test Environmental Factors That Labs Miss

Traditional usability labs create calm, controlled conditions that don’t match how people actually use products. Your checkout flow might test perfectly when a participant sits in a quiet room with full attention on the task. That same flow can fail when they’re on a commute, distracted by notifications, or dealing with ambient noise.

We simulate actual usage environments where attention competes with interruptions constantly. Our Emotional Intelligence tracks contextual pressures and adjusts each persona’s response accordingly, factoring in 17 different environmental and psychological variables.

This reveals when hesitation stems from circumstance rather than messaging, a distinction traditional testing often misses.

8. Reserve Maze Credits for High-Value Sessions

Not every research question requires live human participants. Some do. The trick is knowing which.

Qualitative exploration, emotional response to sensitive topics, and open-ended discovery benefit from human conversation. A/B validation of button placement, credibility assessment of landing pages, and initial concept screening often don’t need that level of investment.

By handling the latter category through Evelance, you stretch your Maze credits further. Your budget focuses on sessions where human nuance adds irreplaceable value, while predictive models handle validation that doesn’t require it.
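
One way to apply this split consistently is to write it down as a simple triage rule. The sketch below is a hypothetical helper, not part of Maze or Evelance: the question-type labels are shorthand for the categories named above, and sending unrecognized question types to a live session is an assumption chosen to err on the side of human feedback.

```python
from enum import Enum


class Method(Enum):
    LIVE_SESSION = "live session (Maze credits)"
    PREDICTIVE = "predictive test (Evelance)"


# Hypothetical labels for the question types listed in this section.
LIVE_QUESTION_TYPES = {
    "qualitative_exploration",
    "sensitive_topic",
    "open_ended_discovery",
}
PREDICTIVE_QUESTION_TYPES = {
    "ab_validation",
    "credibility_assessment",
    "concept_screening",
}


def choose_method(question_type: str) -> Method:
    """Route a research question to the method this section suggests."""
    if question_type in PREDICTIVE_QUESTION_TYPES:
        return Method.PREDICTIVE
    if question_type in LIVE_QUESTION_TYPES:
        return Method.LIVE_SESSION
    # Assumption: anything unclassified goes to a live session by default.
    return Method.LIVE_SESSION


for question in ("ab_validation", "sensitive_topic", "pricing_page_review"):
    print(f"{question}: {choose_method(question).value}")
```

Teams would extend the two sets with their own question types. The value of the exercise is that the routing decision is made once, up front, instead of study by study.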

9. Scale Testing Without Proportional Cost Growth

Traditional research costs scale linearly with volume. More tests mean proportionally more spending. This creates pressure to test less frequently than you should.

Our usage-based pricing works differently: expanding your testing volume actually reduces your average cost per insight. Teams previously excluded by $50,000 platform minimums can validate designs within operational budgets. Startups can test concepts before raising capital.

10. Measure What Matters Through 12 Psychology Dimensions

Maze tells you whether participants completed tasks and how long it took. Valuable information. But it doesn’t always tell you why they hesitated, where they lost trust, or what would have convinced them to act sooner.

Our platform measures 12 emotional states ranging from curiosity to skepticism, from urgency to patience. Single Design Validation assesses user response across all 12 psychology dimensions. A/B Comparison Testing shows which variant performs better on specific measures like credibility or action readiness.

These psychological scores catch problems that task completion metrics miss, often revealing issues that would otherwise surface as mysterious conversion drops months after launch.

Making This Work for Your Team

The goal isn’t to abandon Maze entirely. Both platforms serve different purposes well. The goal is matching each research question to the method that delivers the best answer for the lowest cost.

Organizations that embed user research into product development report improved product usability (83%), higher customer satisfaction (63%), and better product-market fit (35%), according to recent industry data. Making research affordable enough to do more frequently improves all of these outcomes.

By combining our capabilities with your existing testing infrastructure, you can typically achieve an 80% reduction in user testing expenses while improving research velocity and insight quality. That’s not a replacement. That’s a partnership between tools that makes both more effective.

Dec 24, 2025